PROJECT CASE STUDY

Increase college coach engagement

TL;DR - For this project, we needed to increase college coaches' interaction with athletes. We determined that the problem was that athletes were too hidden from college coaches. We decided to test elevating the visibility of the Discover Athletes Tool by removing a piece of UI blocking the athlete list, changing its positioning, and making athlete videos more accessible. Once we launched the test, we saw an immediate 30% increase in athlete video views but an unexpected 15% decrease in another important engagement metric. After some data investigation, we determined that overall there was still an 8% increase in athlete premium upgrades. We successfully increased college coach engagement! We decided to persevere and make iterations to the new feature!

My role for this project: Product Manager & Product Designer

Business Goals & Objectives:

Goal: To increase college coaches' engagement with athletes

Objectives:

Maintain or increase the quality of coach/athlete matches

Leverage Athlete videos (highest conversion rate)

Keep project within a 6-week timeframe


Journey Mapping

Journey mapping is an integral part of our discovery process that we regularly leverage throughout the product cycle to track the experience flows of the target users. We document every touchpoint along the area of emphasis: emails and push notifications, feedback loops, core and secondary calls to action, and points where users are likely to bounce. In our discovery work, we use this map as a framework for filling in user-collected emotions and interaction data.

For this project, it was essential to document every path by which a college coach reached the Discover Athletes area, whether from email and push notifications, the home feed, or another location.

Problems & Questions Brainstorm

All cross-functional groups (PM, Designer, Engineering, & Data) come together for a collaboration session where we share possible problems and questions that we have. We have a healthy debate about which hypotheses and questions have the highest priority for product discovery.

Top Problem Hypothesis: The recruitable athletes are too hidden from the college coaches

Top Questions: Will improvements to the Discover Athletes area have a higher impact than making improvements to the home feed?

Problem & Questions Discovery

Our data and engineering team members begin looking at impressions and unique visits on both the Discover Athletes area and the Home Feed, while the PM and Designer start making user feedback calls to college coaches. We also use Google Analytics to view the usage of the current quick filter screen on the Discover Athletes area, which blocks the list of recruitable athletes.

We learn that the Discover Athletes area is visited by only 1/3 of all active college coaches, but its athlete interaction rate is 2x that of the Home Feed. We also learn through our feedback calls that some coaches are not even aware that a database of recruitable athletes exists. We verify our hypothesis with a survey sent to a group of 200 coaches.

Define Problem Statement, Success Metrics, & Personas

Problem Statement: The recruitable athletes are too hidden in the Discover Athletes area from college coaches

Success Metrics:

Increase the number of unique visits to the Discover Athletes area

Increase the activity amount and rate of college coaches watching athlete videos

Increase the quality of coach/athlete matches

Project completed in 6-week timeframe

Three main personas are created based on the most common cases from the user discovery calls and surveys.

We checked in with our stakeholders and got the green light to continue forward.

Conduct Brainstorm Session

As a group, we begin to list potential solutions for the problem:

Create a feed item on the home page to direct to Discover Athletes Tool

Remove the current quick filter screen

Create an automated email campaign that will push college coaches to recruitable athletes

Move the entire recruitable athletes list to the very front of the experience (Home feed area)

Sketching & User Flows

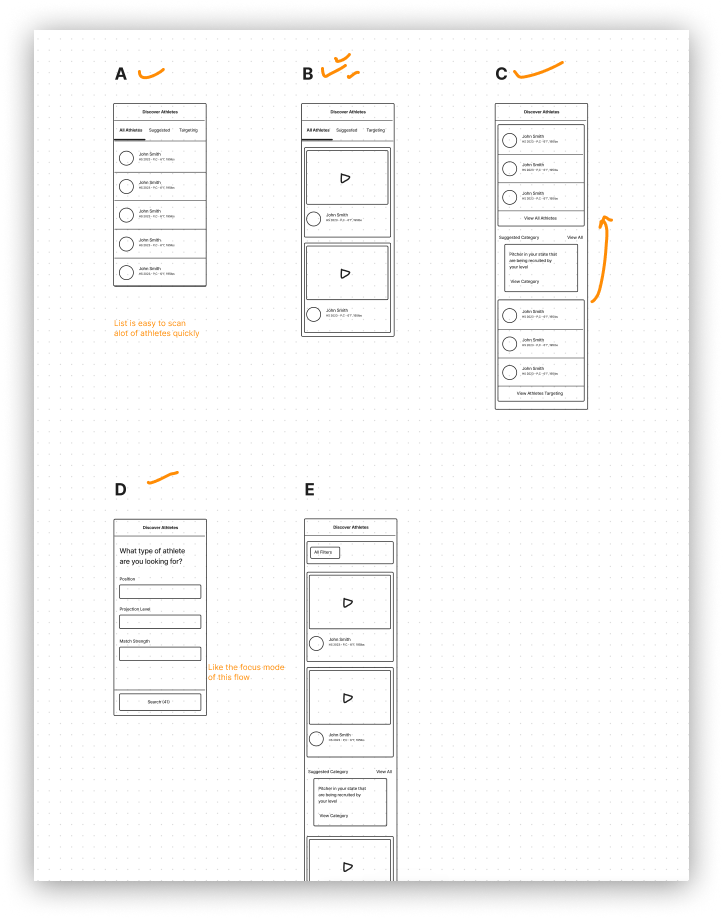

Once we’ve collected our ideas from the brainstorming session, the designer will start sketching concepts and user flows. Sketches range from “Dream-state” (full functionality and dream experiences), through “Nice to have” (partial functionality), to “Must-have” (MVP functionality and a shortcut experience). It’s essential to stay at a low fidelity so that the team and stakeholders can focus on the idea and not the UI interactions and pixels. We want to present a few options for the team to review, as visuals generally help move the decision-making forward.

Competitive & Comparative Analysis

This analysis generally happens in tandem with the sketching and concept phase. Documenting how a competitor or a comparable company solves a similar problem is a valuable step towards understanding product similarities and differences. It is a helpful resource during the decision-making process.

Choose Solution Hypothesis & Test

We ask the engineers for a feasibility check of each solution idea so that we can create an Effort/Impact matrix for the whole team to review. Though moving the entire recruitable athletes list to the Home screen may dramatically increase the visibility of the athlete list, we determined that we were not willing to sacrifice Home feed engagement or take on the huge technical effort it would require. Removing the current quick filter screen on the Discover Athletes area was a quick win that we felt could also have a sizable effect. We checked in with our stakeholders and got the green light to continue.
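An Effort/Impact matrix like the one described above can be sketched in a few lines of Python. This is only an illustration: the 1-5 scores, the threshold, and the quadrant labels other than "quick win" are hypothetical and were not part of the original project. The only facts taken from the case study are that removing the quick filter screen was judged a quick win and that moving the athlete list to the Home feed was a huge effort.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Idea:
    name: str
    effort: int  # 1 (low) to 5 (high); hypothetical score
    impact: int  # 1 (low) to 5 (high); hypothetical score


def quadrant(idea: Idea, threshold: int = 3) -> str:
    """Place an idea in one of four Effort/Impact quadrants."""
    high_impact = idea.impact >= threshold
    high_effort = idea.effort >= threshold
    if high_impact and not high_effort:
        return "quick win"
    if high_impact and high_effort:
        return "big bet"
    if not high_impact and not high_effort:
        return "fill-in"
    return "avoid"


# Illustrative scores only; the real matrix came from the engineers' feasibility check.
ideas = [
    Idea("Home feed item linking to Discover Athletes", effort=2, impact=2),
    Idea("Remove the quick filter screen", effort=1, impact=4),
    Idea("Automated email campaign", effort=3, impact=3),
    Idea("Move athlete list to the Home feed", effort=5, impact=5),
]

# Rank by impact minus effort so cheap, high-impact ideas come first.
ranked = sorted(ideas, key=lambda idea: idea.impact - idea.effort, reverse=True)

for idea in ranked:
    print(f"{idea.name}: {quadrant(idea)}")
```

Ranking by impact minus effort is one simple heuristic among many; the value of the matrix is mostly in giving the whole team a shared picture to debate.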

Our test plan is to dark-release the first version of the new Discover Athletes tool and expose the new feature to a small segment of college coaches. We select an MVP solution with a few “nice to haves” included. We want to update the athlete cards to include athlete videos as well as display the coach evaluation, both of which are buried within the athlete profile. The results of the test will dictate whether we need more iterations of the tool, whether it’s ready to be announced to all users, or whether it has failed and we need to scrap the test.
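One common way to expose a feature to a small, stable segment of users is hash-based bucketing. The sketch below shows the general idea; it is not the mechanism the team actually used, and the function name, experiment key, and ID format are all hypothetical.

```python
import hashlib


def in_test_segment(coach_id: str, experiment: str, percent: int) -> bool:
    """Return True if this coach falls in the first `percent` of 100 buckets.

    Hashing the experiment name together with the coach ID keeps the
    assignment stable across sessions and independent between experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{coach_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # bucket in the range 0..99
    return bucket < percent


# Hypothetical usage: show the new tool to roughly 10% of coaches.
coach_ids = [f"coach-{n}" for n in range(10_000)]
segment = [c for c in coach_ids if in_test_segment(c, "discover-athletes-v1", 10)]
print(f"{len(segment)} of {len(coach_ids)} coaches see the new tool")
```

Because the assignment depends only on the ID and the experiment key, a coach sees the same version on every visit without any stored state.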

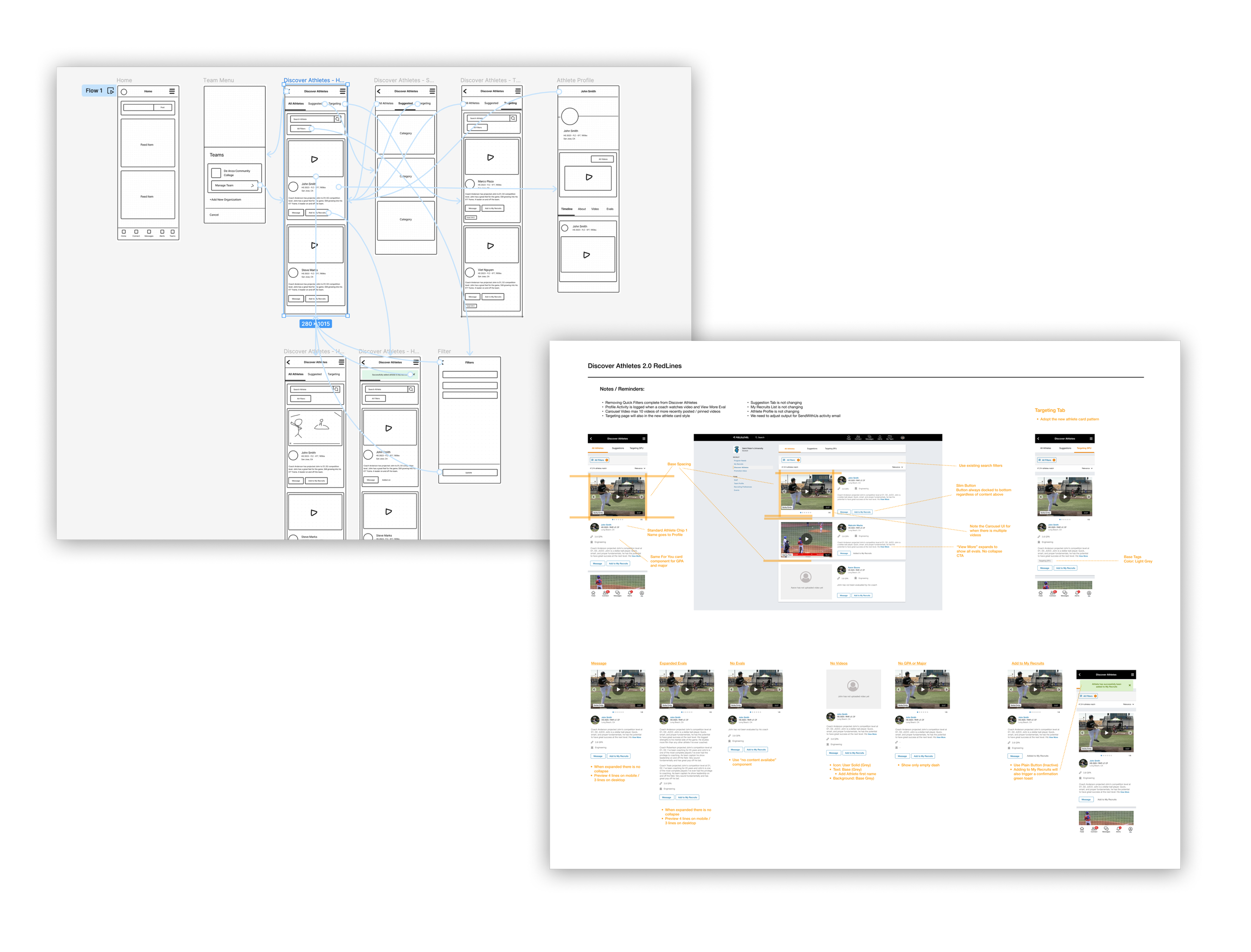

Wireframes & Prototypes

Once a solution hypothesis has been selected, it is time to take the feedback and notes from the sketches and user flows and bring them into wireframes and prototypes. We start to incorporate more UI details, content, and flow scenarios in preparation for user testing.

User-testing, Data Application, & Iterations

We start putting together our user test plan and reach out to our internal team members and user groups to run through a few different scenarios with the prototype. We take that feedback and make iterations to the wireframes and document them to be shared with the team.

After a few iterations, we prepare for a “state-change” design review. We start to build cases through user-feedback and data analysis for solutions we want to propose in our design review.

Design Reviews

We begin all our design reviews by reminding the group of the project goals and objectives, the defined problem, and the proposed solution direction. We want to make clear what we are trying to accomplish with the particular design review, whether it be a “state change” or a more specific UI/UX/content review. We briefly overview our findings, relevant data, or user feedback and then walk through the proposed flow. Details such as a rollout plan or implementation details are generally not included during this review. We are either given feedback and asked to do another round of iterations or are given the green light to move forward.

Hi-fi Specs & Deliverables

Once stakeholders give the green light, we prepare the development deliverables. These consist of redlines outlining specific components and patterns, states, notification triggers, dynamic data points, and API calls that must be made. An updated journey map and prototype are also included for reference. Once it’s ready for a handoff, the designer will meet with the developers and PM to review the deliverables and provide additional context.

Pre-launch Communications, QA, & Tracking

Once we have a game plan for our test, we make sure that we notify the company of what we’re hoping to test and what changes can be expected. We set up a meeting with the Customer Success team to give a demo of what’s to come in case they need to field any customer service issues. We also coordinate updates to any FAQs or help documentation.

We run a couple of rounds of QA testing and log a few errors and cosmetic changes.

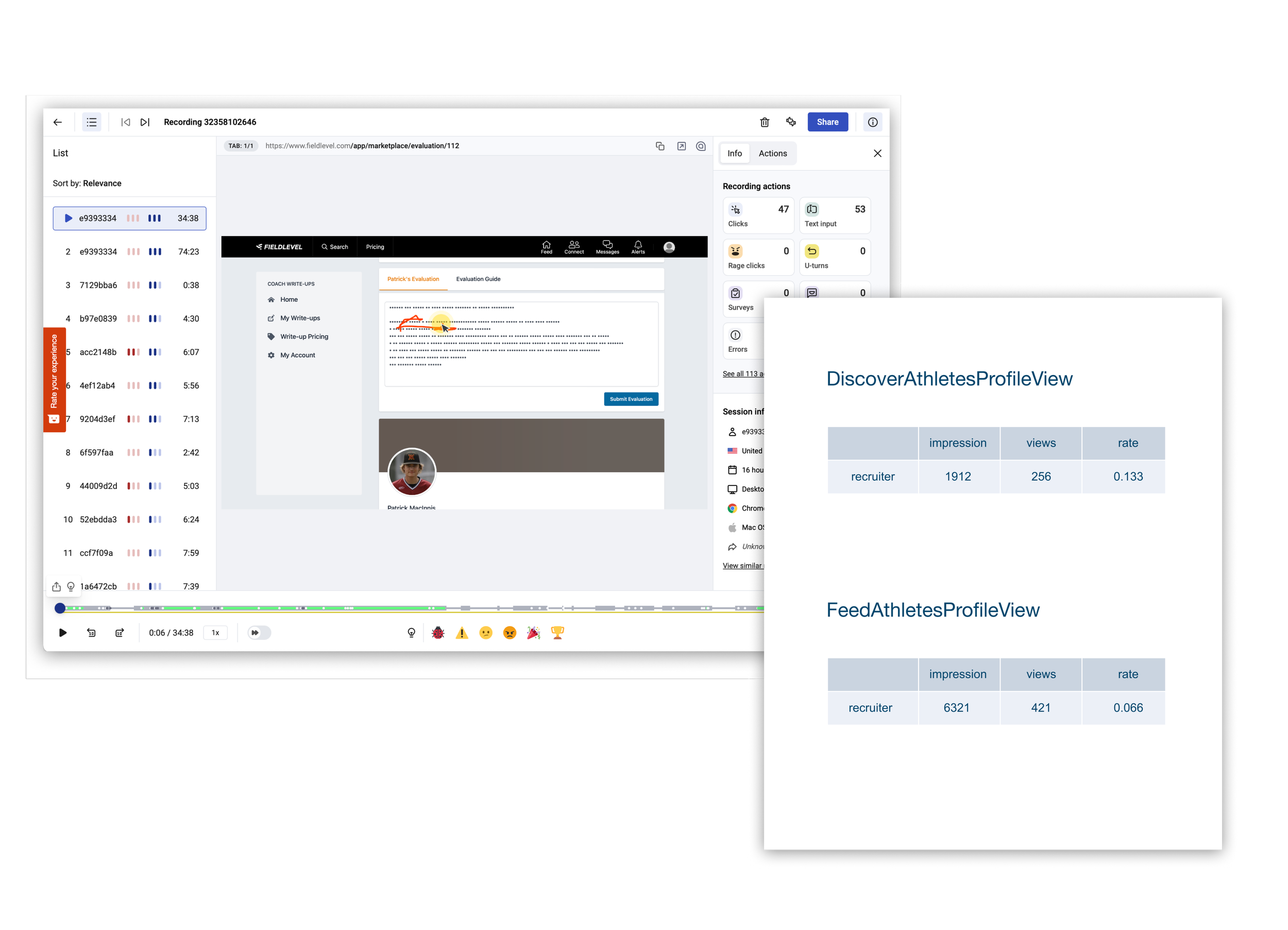

We also set up our interaction recording software (Hotjar) so that we can observe user interactions and discuss any visible changes or experience failures that may need to be fixed immediately. Because we are also testing our new CTA positioning, we add Google Analytics click tracking.

Launch & Post-launch Communications

We deploy and the test is live!

We inform the company of the release. The team has a mini-celebration!

We start working on the next item on the priority list concurrently.

Review Test Results & Make Decision

We generally let the test run for about 3-5 days before we review the data. What we observed was an immediate 30% spike in videos viewed by unique college coaches. But the new changes also produced an unexpected behavior. Since athletes and their videos were more visible and accessible, coaches had less need to click through to the athlete’s profile. As a result, athlete profile views decreased by 15%.

We needed to immediately investigate whether the increase in video views and decrease in profile views was going to be a benefit or a detriment to the company. Our data analyst was brought in to review the situation. After investigating the data, we found an 8% increase in athlete premium upgrades despite the decrease in profile views. This was great news! We also observed that the college/athlete match rate per profile visit had increased by 10% as well.
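The percentages above are relative changes against the pre-test baseline. As a minimal sketch of that arithmetic, the snippet below uses made-up counts chosen only so that they reproduce the reported 30%, 15%, and 8% figures; the actual volumes are not stated in this case study.

```python
def relative_change(baseline: float, observed: float) -> float:
    """Return the change from baseline to observed as a fraction of baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (observed - baseline) / baseline


# Hypothetical (baseline, observed) counts; only the resulting percentages come from the test.
metrics = {
    "athlete video views": (1000, 1300),
    "athlete profile views": (2000, 1700),
    "premium upgrades": (250, 270),
}

changes = {name: relative_change(before, after) for name, (before, after) in metrics.items()}

for name, change in changes.items():
    print(f"{name}: {change:+.0%}")
```

Tracking the guardrail metrics (profile views, upgrades) next to the primary metric in one view makes a trade-off like this visible as soon as the data comes in.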

At this point, we need to decide whether to Pivot or Persevere. All signals from this test were positive. Although it is still early in the test, we feel confident enough to persevere to the next iteration of the feature. We look at our priority list and start going through the process of figuring out what to test next.

Retrospective

We generally schedule our retrospective a week after the start of the next project.

What worked?

we were able to identify the problem quickly

we had a solution that increased our desired project metrics

What didn’t work?

we did not account for the possibility that other important engagement metrics would decrease; more risk assessment could have been done

How can we improve?

for the next project, we should add a risk assessment process

Any conflicts that need to be addressed?

No conflicts
